BrightEdge Crawler

What is BrightEdge Crawler?

The BrightEdge Crawler is an automated bot operated by BrightEdge, a company specializing in search engine optimization (SEO) and content performance analytics. It systematically navigates websites and collects data on their pages, which BrightEdge analyzes to provide insights into SEO performance, keyword rankings, and content optimization strategies. The crawler mimics the behavior of search engine bots to understand how a website is likely to be indexed and ranked by major search engines, which helps businesses optimize their online presence and improve their visibility in search results. The collected data feeds the reports that guide BrightEdge clients’ digital marketing strategies, helping them make their content more effective and extend its reach. The crawler operates within the robots.txt protocol, respecting website owners’ preferences about which parts of their site may be crawled.

Why is BrightEdge Crawler crawling my site?

BrightEdge Crawler visits your website primarily for SEO analysis. It gathers data on your site’s structure, content, and performance metrics to help BrightEdge’s clients optimize their digital marketing strategies. By crawling your site, BrightEdge can assess how well your pages are optimized for search engines, identify potential improvements, and track keyword rankings. This information is valuable for businesses looking to improve their online visibility and competitiveness. The crawler may also revisit your site to monitor changes in its content or structure over time, providing ongoing insights into SEO performance.

How to block BrightEdge Crawler?

1. Robots.txt File: Add a specific directive in your robots.txt file to disallow the BrightEdge Crawler. Use the following syntax:

User-agent: BrightEdge
Disallow: /

This instructs the crawler not to access any part of your website. Compliance with robots.txt is voluntary, so this method relies on the crawler honoring the file.

2. IP Address Blocking: Identify the IP addresses used by BrightEdge Crawler and block them at your server or firewall level. This method requires regular updates as IP addresses may change.

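3. User-Agent Filtering: Implement server-side filtering based on the user-agent string. Configure your server to deny requests whose user-agent contains "BrightEdge". Because user-agent strings are self-reported, this only stops requests that identify themselves honestly.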

4. Web Application Firewall (WAF): Use a WAF to create rules that specifically block requests from BrightEdge Crawler based on its user-agent or IP address patterns.

5. CAPTCHA Implementation: Present CAPTCHA challenges to visitors suspected of being automated. While this may not directly block the crawler, it can deter automated access by requiring human verification.

6. Rate Limiting: Configure rate limiting on your server to restrict the number of requests from a single IP address or user-agent within a specific timeframe, potentially slowing down or deterring crawlers.
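To check the robots.txt rule from item 1 before deploying it, Python's standard-library `urllib.robotparser` can evaluate it against sample requests. This is a minimal sketch: the full user-agent string `BrightEdge Crawler/1.0 (crawler@brightedge.com)` is an assumption (confirm the exact value in your own access logs), and Python's parser follows the original robots.txt conventions, so BrightEdge's own parser may differ in edge cases.

```python
from urllib.robotparser import RobotFileParser

# The two-line rule from item 1, as it would appear in robots.txt.
ROBOTS_TXT = """\
User-agent: BrightEdge
Disallow: /
"""


def is_allowed(user_agent: str, url: str) -> bool:
    """Return True if the robots.txt above permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # Assumed user-agent string; check your logs for the exact value.
    brightedge_ua = "BrightEdge Crawler/1.0 (crawler@brightedge.com)"
    print(is_allowed(brightedge_ua, "https://example.com/products"))    # False
    print(is_allowed("Googlebot/2.1", "https://example.com/products"))  # True
```

Python's parser matches the `User-agent` token as a case-insensitive substring of the crawler's name, so `BrightEdge` matches the assumed string above. Other crawlers are allowed because the file contains no `User-agent: *` group.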
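For IP address blocking (item 2), the core check is whether a client address falls inside a list of blocked networks, which Python's `ipaddress` module handles directly. This is a sketch only: the ranges below are RFC 5737 documentation placeholders, not BrightEdge addresses, and `BLOCKED_NETWORKS` and `is_blocked_ip` are hypothetical names. Substitute ranges you have verified yourself and keep them updated.

```python
import ipaddress

# Placeholder ranges from RFC 5737 documentation space. These are NOT
# BrightEdge addresses; replace them with ranges you have verified.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def is_blocked_ip(client_ip: str) -> bool:
    """Return True if client_ip falls inside any blocked network."""
    addr = ipaddress.ip_address(client_ip)
    # An IPv6 address is never "in" an IPv4 network, so mixed
    # versions simply evaluate to False instead of raising.
    return any(addr in network for network in BLOCKED_NETWORKS)


if __name__ == "__main__":
    print(is_blocked_ip("192.0.2.45"))   # True
    print(is_blocked_ip("203.0.113.7"))  # False
```

In production this check usually lives in the firewall or reverse proxy rather than in application code. The sketch only shows the matching logic.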
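User-agent filtering (item 3) can be sketched as WSGI middleware that returns `403 Forbidden` when the `User-Agent` header contains a blocked token. The token `"brightedge"` is an assumption based on the crawler's name, and `block_user_agents`, `hello_app`, and `call` are hypothetical helpers written for this illustration.

```python
# Case-insensitive substrings to reject; "brightedge" is an assumed token.
BLOCKED_UA_SUBSTRINGS = ("brightedge",)


def block_user_agents(app):
    """Wrap a WSGI app so requests with matching user agents get 403."""
    def middleware(environ, start_response):
        user_agent = environ.get("HTTP_USER_AGENT", "").lower()
        if any(token in user_agent for token in BLOCKED_UA_SUBSTRINGS):
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"Forbidden"]
        return app(environ, start_response)
    return middleware


def hello_app(environ, start_response):
    """Stand-in application used only for this demonstration."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Hello"]


app = block_user_agents(hello_app)


def call(wsgi_app, user_agent):
    """Invoke a WSGI app directly and return (status, body) for testing."""
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    body = b"".join(wsgi_app({"HTTP_USER_AGENT": user_agent}, start_response))
    return captured["status"], body


if __name__ == "__main__":
    print(call(app, "BrightEdge Crawler/1.0 (crawler@brightedge.com)"))
    print(call(app, "Mozilla/5.0"))
```

To serve the wrapped app locally, the standard library's `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` works. Most sites would apply the same rule in their web server or proxy configuration instead.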
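Every WAF (item 4) has its own rule syntax, so rather than guess at any vendor's format, the sketch below models the general idea in plain Python: an ordered list of rules, each matching on a user-agent regex or IP ranges, where the first match decides the action. The `Rule` class, the rule names, and the allow-list exception (for example, a site that wants to let one known address through) are hypothetical. The CIDR ranges are RFC 5737 placeholders.

```python
import ipaddress
import re
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Rule:
    """Hypothetical WAF-style rule; matches if ANY of its conditions match."""
    name: str
    action: str                       # "allow" or "block"
    ua_pattern: Optional[str] = None  # regex, matched case-insensitively
    cidrs: List[str] = field(default_factory=list)

    def matches(self, user_agent: str, client_ip: str) -> bool:
        if self.ua_pattern and re.search(self.ua_pattern, user_agent, re.IGNORECASE):
            return True
        addr = ipaddress.ip_address(client_ip)
        return any(addr in ipaddress.ip_network(cidr) for cidr in self.cidrs)


# Rules are checked top to bottom and the first match wins, so the
# narrow allow exception must come before the broader block rule.
RULES = [
    Rule("allow-known-address", "allow", cidrs=["198.51.100.10/32"]),
    Rule("block-brightedge", "block", ua_pattern=r"brightedge",
         cidrs=["192.0.2.0/24"]),
]


def evaluate(user_agent: str, client_ip: str, default: str = "allow") -> str:
    """Return the action of the first matching rule, else the default."""
    for rule in RULES:
        if rule.matches(user_agent, client_ip):
            return rule.action
    return default
```

Ordering matters: if the block rule came first, the exception for `198.51.100.10` would never be reached for requests carrying the BrightEdge user agent.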
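Rate limiting (item 6) is commonly implemented as a sliding-window log: remember the timestamps of recent accepted requests per key (an IP address or user-agent) and reject new ones once the count within the window reaches the limit. The sketch below assumes that technique; `SlidingWindowLimiter` is a hypothetical class, and the injectable `clock` parameter exists only to make it testable.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per `window` seconds for each key."""

    def __init__(self, limit: int, window: float, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        # key -> timestamps of accepted requests, oldest first
        self._hits = defaultdict(deque)

    def allow(self, key: str) -> bool:
        now = self.clock()
        hits = self._hits[key]
        # Drop timestamps that have fallen out of the current window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False
        hits.append(now)
        return True


if __name__ == "__main__":
    limiter = SlidingWindowLimiter(limit=3, window=10.0)
    # The fourth request within 10 seconds from the same key is rejected.
    print([limiter.allow("203.0.113.7") for _ in range(4)])
```

Keying on the client IP limits each address separately, while keying on the user-agent string throttles a crawler across all of its addresses. An in-memory limiter like this only works within a single process; multi-server deployments typically keep the counters in a shared store.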

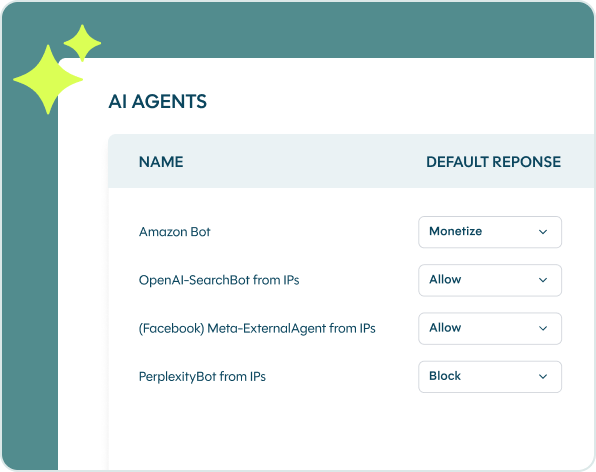

Block and Manage BrightEdge Crawler with DataDome

Fingerprint and detection data provided by DataDome. Naming conventions and descriptive summaries may be partially generated by LLMs.
